Addressing Duplicate Content Issues in SEO

Duplicate content is a common issue in SEO that can significantly impact your site's visibility. It refers to identical or substantially similar content appearing on multiple URLs.

Search engines may struggle to determine which version to rank, leading to lower search rankings and diluted link equity.

Addressing this issue is important for maintaining a strong SEO presence. Let’s examine the causes of duplicate content, how to identify each one, and how to resolve it effectively.

Causes, Identification, and Solutions for Duplicate Content

URL Parameters

URL parameters often lead to duplicate content issues. Parameters like session IDs, tracking codes, and sort orders create multiple URLs with similar content.

To identify duplicate content caused by URL parameters, use tools such as Screaming Frog SEO Spider and Google Search Console. These tools can crawl your site and list all the URLs. Look for URLs with parameters that point to the same content.
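If you export the crawled URL list, a short script can do the grouping for you. The following is a minimal Python sketch, not a feature of Screaming Frog or Google Search Console. The parameter names in `IGNORED_PARAMS` and the example.com URLs are assumptions chosen for illustration; replace them with the parameters your own site uses.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of parameters that do not change the page content.
# Adjust it to match the parameters your own site actually uses.
IGNORED_PARAMS = {"sessionid", "sid", "utm_source", "utm_medium", "utm_campaign", "sort"}


def strip_ignored_params(url: str) -> str:
    """Return the URL without ignored query parameters and without a fragment."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def group_parameter_duplicates(urls):
    """Map each stripped URL to the crawled variants that collapse onto it."""
    groups = defaultdict(list)
    for url in urls:
        groups[strip_ignored_params(url)].append(url)
    # Only groups with more than one variant are potential duplicates.
    return {base: variants for base, variants in groups.items() if len(variants) > 1}
```

Any group returned here is only a candidate: confirm that the variants really serve the same content before treating them as duplicates.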

Solution:

Implement canonical tags to consolidate the URL parameters into a single preferred URL. This tells search engines which version of the URL should be indexed.

Additionally, ensure your website’s internal linking structure points to the canonical URLs to reduce the chances of duplicate content issues caused by URL parameters.

You can use Screaming Frog to regularly audit your site to catch any new issues early.

A proactive approach will keep your SEO efforts on track.

Session IDs

Session IDs can cause duplicate content by appending unique identifiers to each user session URL. This results in multiple URLs for the same content.

For example, the same page reached in two different sessions produces two different URLs. Both display the same content, but search engines treat them as separate pages, so multiple versions of the same content can be indexed, which harms your SEO.

To spot duplicate content from session IDs, use Google Search Console or site crawling tools like Screaming Frog SEO Spider. Examine the URLs and identify patterns where session IDs are appended. This helps in pinpointing the problem.
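The pattern check can also be scripted against an exported URL list. This is a rough Python sketch under stated assumptions: the names in `SESSION_PARAM_NAMES` are common defaults (for instance `PHPSESSID` in PHP and `jsessionid` in Java servlet containers), and your platform may use a different name.

```python
import re
from urllib.parse import parse_qsl, urlsplit

# Assumed session parameter names; extend this set for your own platform.
SESSION_PARAM_NAMES = {"sid", "sessionid", "session_id", "phpsessid", "jsessionid"}

# Java servlet containers may append ";jsessionid=..." to the path itself.
PATH_SESSION_RE = re.compile(r";jsessionid=[^/?#]+", re.IGNORECASE)


def has_session_id(url: str) -> bool:
    """Return True if the URL appears to carry a session identifier."""
    parts = urlsplit(url)
    if PATH_SESSION_RE.search(parts.path):
        return True
    query_keys = (key.lower() for key, _ in parse_qsl(parts.query, keep_blank_values=True))
    return any(key in SESSION_PARAM_NAMES for key in query_keys)
```

Running `has_session_id` over every crawled URL gives a quick list of pages to review.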

Solution:

Configure your server to avoid using session IDs in URLs. Instead, store session data in cookies.

If avoiding session IDs isn't possible, the recommended fallback is to use canonical tags to indicate the preferred version of the URL to search engines. This helps ensure that search engines index only one version of the content.

Printer-Friendly Versions

Printer-friendly versions of web pages can create duplicate content by providing alternative URLs with the same content formatted for printing.

Manually search for printer-friendly versions on your site or use site audit tools to find URLs ending with “/print” or similar. Check for significant content overlap between these URLs and their original versions.
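As a rough illustration, the search for “/print” URLs could be scripted in Python as shown here. The `/print` suffix and the example.com URLs are assumptions; sites that use a different pattern (a query parameter, for example) would need a different check.

```python
from urllib.parse import urlsplit, urlunsplit

PRINT_SUFFIX = "/print"  # assumed naming convention for printer-friendly pages


def find_print_pairs(urls):
    """Return (print_url, original_url, original_was_crawled) for each print URL."""
    crawled = set(urls)
    pairs = []
    for url in urls:
        parts = urlsplit(url)
        path = parts.path.rstrip("/")
        if path.endswith(PRINT_SUFFIX):
            # Drop the suffix to guess the URL of the main content page.
            original_path = path[: -len(PRINT_SUFFIX)] or "/"
            original = urlunsplit(
                (parts.scheme, parts.netloc, original_path, parts.query, "")
            )
            pairs.append((url, original, original in crawled))
    return pairs
```

Each pair is a candidate for a manual content comparison between the print page and its original.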

Solution:

Use canonical tags on printer-friendly pages to point to the main content page. Alternatively, you can implement meta robots noindex tags on printer-friendly pages, preventing them from being indexed.

Another option is to provide a print CSS for the main page, eliminating the need for a separate URL.

Content Syndication

Content syndication means republishing your content on other websites. While this practice can significantly broaden your reach and increase your audience, it often leads to duplicate content issues that can negatively impact your SEO.

Search engines may struggle to determine which version of the content is the original, so a syndicated copy can end up ranking in place of your own page.

To monitor syndicated content, use tools like Copyscape. Copyscape allows you to track where your content appears across the web by comparing your original content with versions found on other sites. Google Alerts can also be set up for specific phrases unique to your content, providing notifications whenever your content appears elsewhere online.

Solution:

One effective solution to manage syndicated content is to use rel="canonical" tags on the republished pages. These tags indicate to search engines that the content is a duplicate and point them to the source. By implementing canonical tags, you help search engines understand which version of the content should be indexed and ranked, thus preserving your SEO integrity.

Alternatively, you can ensure that each republished piece includes a link back to the original content with proper attribution. It's important to communicate with the syndicating websites to make sure that they follow this practice consistently.

Scraped or Copied Content

Scraped or copied content occurs when other websites copy your content without permission, diluting your content’s value, negatively impacting your search engine rankings, and even leading to penalties from search engines.

To identify scraped or copied content, use tools like Copyscape to scan the internet for copies of your content. Copyscape compares your original content with what it finds online, highlighting any matches. Manually checking websites that look suspicious can also help identify if your content has been stolen.
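When checking a suspicious page manually, a simple overlap score can make the comparison less subjective. The Python sketch below uses word shingles and Jaccard similarity. This is a generic technique chosen for illustration and is not a description of how Copyscape works; the shingle size of 5 is an arbitrary assumption.

```python
import re


def shingles(text: str, size: int = 5) -> set:
    """Return the set of overlapping word sequences of the given size."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(a: str, b: str, size: int = 5) -> float:
    """Return shared shingles divided by all shingles (0.0 to 1.0)."""
    shingles_a, shingles_b = shingles(a, size), shingles(b, size)
    if not shingles_a and not shingles_b:
        return 0.0
    return len(shingles_a & shingles_b) / len(shingles_a | shingles_b)
```

A score near 1.0 suggests a near-verbatim copy, while a low score suggests the overlap is incidental. Where to draw the line is a judgment call for your own content.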

Solution:

Once you identify instances of scraped or copied content, the first step is to contact the offending website’s owner and request the removal of your content. Be clear and firm in your communication, citing copyright infringement as the reason for your request. If this approach fails, you can file a DMCA (Digital Millennium Copyright Act) takedown request. This legal procedure can force the removal of your stolen content from search engines and the offending website.

It is advisable to regularly monitor your content to catch new instances of scraping as soon as they occur. Staying vigilant and proactive is key to maintaining the integrity of your content and preserving your search engine rankings.

By combining legal, technical, and monitoring strategies, you can effectively manage and mitigate the impact of scraped or copied content on your website.

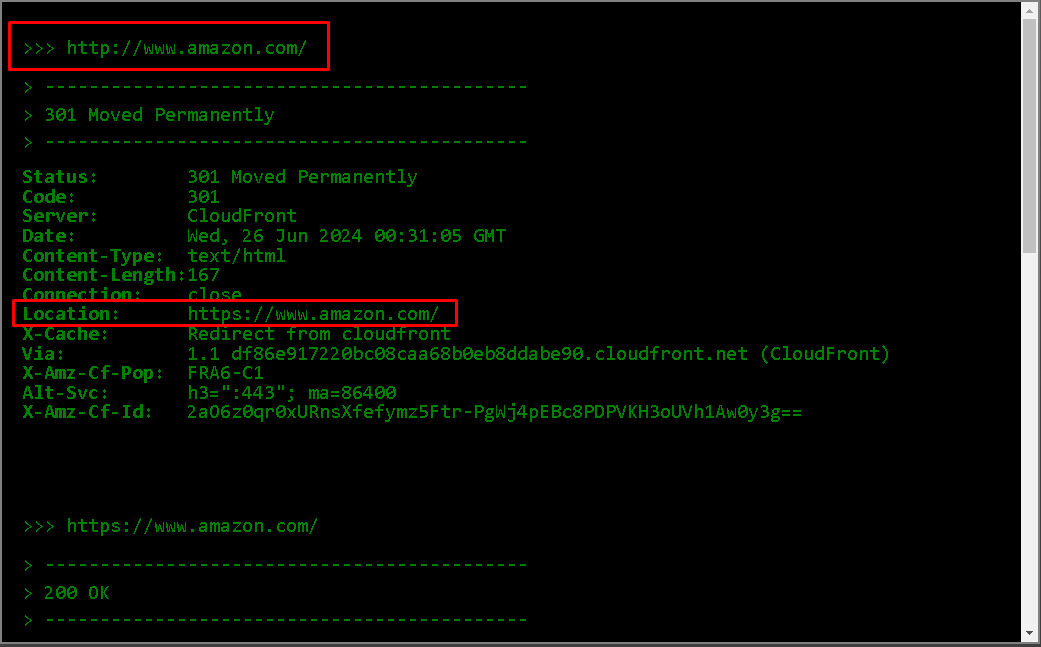

HTTP vs. HTTPS Versions

Having both HTTP and HTTPS versions of your website can lead to duplicate content issues. Search engines may index both versions, causing confusion and diluting your content’s value.

To identify duplicate content caused by HTTP and HTTPS versions, use Google Search Console. This tool helps you find and compare the indexed versions of your site. Ensure you check for both HTTP and HTTPS versions in the search console to see which URLs are being indexed.

Solution:

To solve this issue, implement 301 redirects from HTTP to HTTPS. This tells search engines that the HTTPS version is the preferred and secure version of your site.

Google has retired the preferred domain setting in Search Console, so consolidation now relies on the redirects and on consistent signals from your own site. Keep canonical tags, internal links, and your XML sitemap pointing at HTTPS URLs so that search engines index only the secure version of your content, improving your site’s SEO and providing a better user experience.

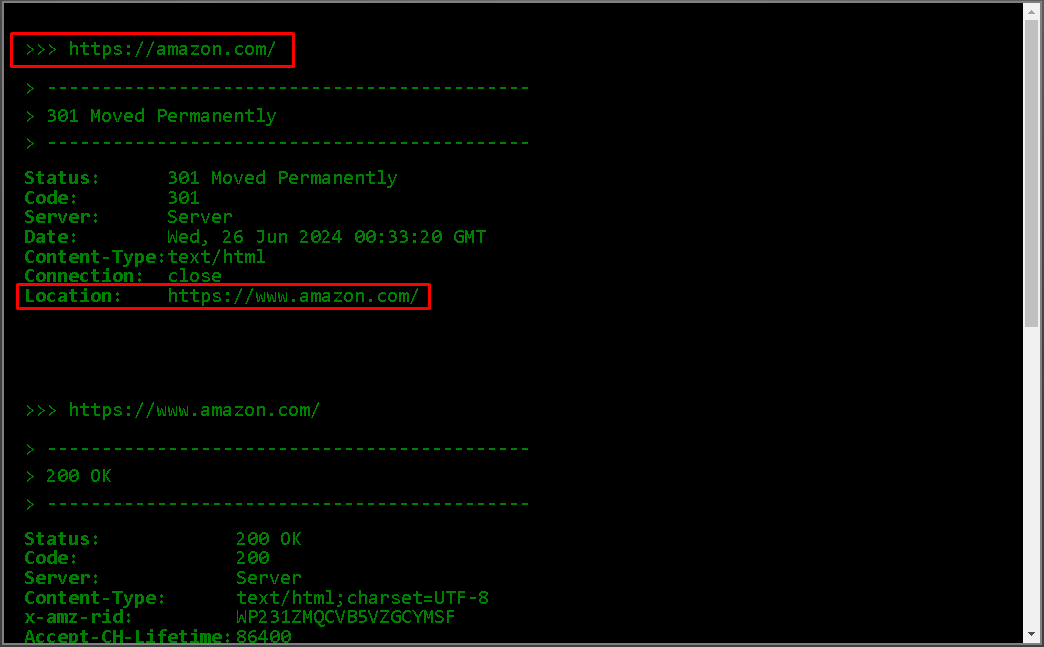

www vs. non-www Versions

Similar to the HTTP and HTTPS issues, having both www and non-www versions of your site can create duplicate content problems. Search engines may treat these as separate sites, which can split your content’s ranking power.

To identify this issue, use tools like Screaming Frog SEO Spider or Google Search Console. These tools will help you spot whether both versions are being indexed and identify duplicate content resulting from this split.

Solution:

Implement 301 redirects to consolidate your preferred version, whether it’s www or non-www. This directs all traffic to the chosen version and signals to search engines which version to index.
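The redirect rule itself is small. The Python sketch below shows the decision a server or middleware would make for each request, handling the www choice and the HTTP to HTTPS case together. The host names and the choice of `www.example.com` as the preferred version are assumptions for illustration; in practice this logic usually lives in your web server or CDN configuration rather than in application code.

```python
from typing import Optional, Tuple
from urllib.parse import urlsplit, urlunsplit

PREFERRED_SCHEME = "https"
PREFERRED_HOST = "www.example.com"              # hypothetical preferred version
SITE_HOSTS = {"example.com", "www.example.com"}  # every host that serves the site


def redirect_for(url: str) -> Optional[Tuple[int, str]]:
    """Return (301, target) if the URL is a non-preferred version, else None."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    if host not in SITE_HOSTS:
        return None  # not our site, nothing to consolidate
    if parts.scheme == PREFERRED_SCHEME and host == PREFERRED_HOST:
        return None  # already the preferred version
    # Keep the path and query so each page redirects to its own equivalent.
    target = urlunsplit(
        (PREFERRED_SCHEME, PREFERRED_HOST, parts.path or "/", parts.query, "")
    )
    return 301, target
```

Redirecting each page to its own equivalent, rather than sending everything to the home page, keeps the ranking signals attached to the right URL.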

Google Search Console no longer offers a preferred domain setting, so reinforce the redirect by using your chosen version consistently in canonical tags, internal links, and your XML sitemap. This approach helps prevent search engines from indexing both versions, maintaining the integrity and ranking power of your content.

Variations of the Same Content

Variations of the same content across different URLs can arise from minor changes in URLs, such as added parameters or slight alterations. This creates duplicate content, confusing search engines and harming your rankings.

To identify such variations, conduct a thorough audit using a crawler such as Screaming Frog SEO Spider. A crawl lists all your URLs and helps you identify those with minor variations leading to the same content. Manually comparing these URLs can also help in identifying such issues.
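If you have the extracted text of each crawled page, exact duplicates can be grouped by fingerprint. This minimal Python sketch assumes a hypothetical `pages` dictionary mapping each URL to its main text; how you extract that text from a crawl is up to you. It only catches pages whose text is identical after lowercasing and whitespace normalisation, not near-duplicates.

```python
import hashlib
from collections import defaultdict


def content_fingerprint(text: str) -> str:
    """Hash the text after lowercasing and collapsing whitespace."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_exact_duplicates(pages: dict) -> list:
    """Return sorted groups of URLs whose normalized text is identical."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]
```

Each returned group is a set of URL variations that should share one canonical URL.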

Solution:

Use canonical tags to indicate the preferred version of your content. Ensure that your internal linking structure consistently points to the canonical URLs. This practice reduces the risk of creating duplicate content through URL variations and consolidates your content’s ranking signals, improving overall SEO performance.

Regular audits and vigilant monitoring are essential to catch and address new instances of URL variations. By maintaining a consistent URL structure and using canonical tags effectively, you can prevent duplicate content issues and ensure that your site remains optimised for search engines.

Preventing Future Duplicate Content

Preventing future duplicate content requires a proactive and consistent approach.

Regular audits are essential. Use tools like SEMrush and Google Search Console to periodically check your site for duplicate content. These tools help identify issues before they impact your SEO. Schedule these audits to ensure your content remains unique and properly indexed.

Configuring your CMS settings correctly can prevent many duplicate content issues. Make sure your CMS handles URL parameters properly and generates consistent URL structures. Implement canonical tags automatically for new content. Properly configured CMS settings reduce the risk of duplicate content arising from technical issues, providing a solid foundation for your SEO strategy.
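As an illustration of automatic canonical tags, the Python sketch below builds the tag a template could emit for each page. It is not the API of any particular CMS. It assumes `www.example.com` over HTTPS is the preferred version and that no query parameter changes the page content, so the whole query string is dropped; adjust both assumptions for your site.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit


def canonical_link_tag(page_url: str, preferred_host: str = "www.example.com") -> str:
    """Build a canonical link tag for the page's preferred URL.

    Assumes HTTPS on preferred_host is the preferred version and that
    query strings and fragments never identify distinct content.
    """
    parts = urlsplit(page_url)
    canonical = urlunsplit(("https", preferred_host, parts.path or "/", "", ""))
    # Escape the URL so it is safe inside an HTML attribute.
    return f'<link rel="canonical" href="{escape(canonical, quote=True)}">'
```

The returned tag belongs in the `<head>` of the page it describes.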

Creating unique, high-quality content is important. Avoid thin content that offers little value. Each piece should provide unique insights or information. This not only prevents duplication but also enhances user engagement and search engine rankings. Encourage your content creators to focus on originality and depth, so that your website consistently delivers valuable and unique content to its audience.

Final Thoughts

Addressing duplicate content is essential for maintaining a strong online presence. By identifying and resolving these issues promptly, you safeguard your website's integrity and enhance its search engine performance.

For expert assistance with managing duplicate content and other technical SEO challenges, consider Marketix Digital. As the leading SEO Agency in Sydney, we provide comprehensive SEO services to help your business achieve optimal search engine performance.
