A Guide to Robots.txt for SEO

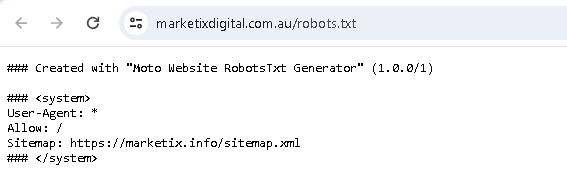

One important thing that you shouldn't overlook in your SEO strategy is the robots.txt file. This file tells search engine crawlers which parts of your site they can access.

Properly configuring your robots.txt file helps you manage crawler traffic and steer crawlers towards your most important pages.

What is a Robots.txt File?

A robots.txt file is a simple text file located in the root directory of your website.

It provides instructions to search engine crawlers about which pages or files they can or cannot request from your site. These instructions are known as directives and help control the flow of crawler traffic.

The primary purpose of the robots.txt file is to prevent overloading your server with requests. By specifying certain pages or files to be excluded from crawling, you can optimise your site's performance and make sure that the most important content is prioritised.

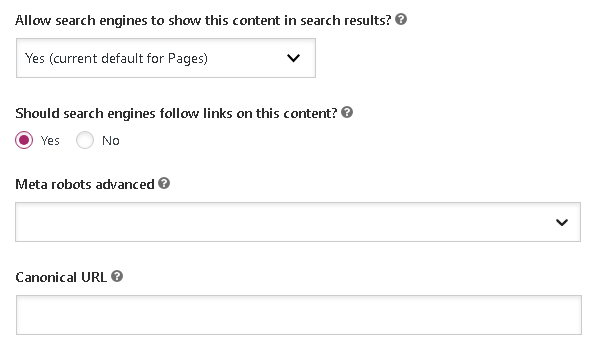

It's important to note that the robots.txt file is not a tool for blocking pages from being indexed by search engines.

To keep a page out of search results, use the noindex meta tag or password-protect the page. A crawler can only see a noindex tag on a page it is allowed to crawl, so don't block that same page in robots.txt. The robots.txt file only prevents crawling of the specified pages; they may still be indexed if other sources link to them.

Importance of Robots.txt in SEO

The robots.txt file plays a huge role in SEO by guiding search engine crawlers on how to interact with your site.

Properly configured, it can enhance crawl efficiency and make sure that search engines focus on your most important pages.

This prioritisation helps key content get crawled and indexed reliably, which can improve your site's search engine performance.

Moreover, robots.txt helps keep crawlers away from duplicate or low-value content, so crawl activity isn't spent on pages that add little SEO value.

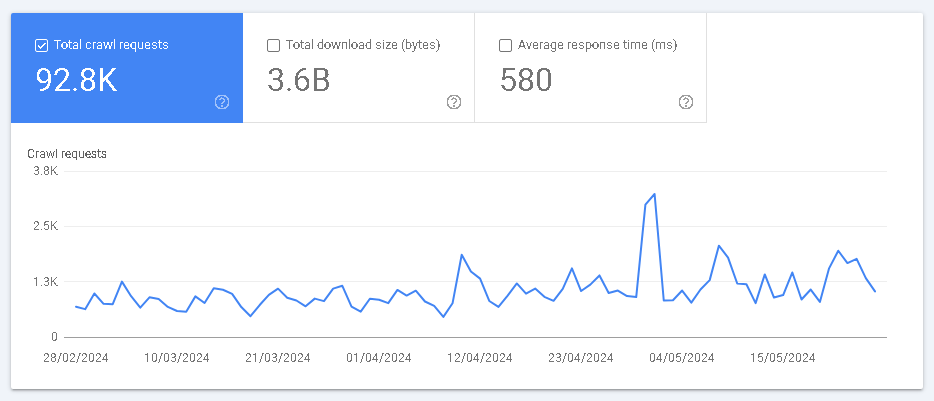

Another significant benefit of robots.txt is its ability to manage crawler load.

Large websites with extensive content can suffer from excessive crawler traffic, which can slow down the server. By using robots.txt, you can reduce the number of URLs crawlers request (and slow down those crawlers that support the Crawl-delay directive), so that your server remains responsive to user requests.

For e-commerce sites or large content-driven platforms where user experience is everything, this matters a lot.

However, it's important to understand the limitations of robots.txt. Not all crawlers obey the rules set in this file, and some might ignore them entirely. Therefore, for critical content that must remain private, relying solely on robots.txt is not advisable.

Use additional measures instead, such as password protection for private content or the noindex tag for pages that must stay out of search results.

Lastly, while robots.txt is a valuable tool for SEO, it should be used judiciously. Overusing it to block pages can lead to important content being missed by search engines.

Therefore, audit and update your robots.txt file regularly so that it stays aligned with your SEO goals and site structure.

Basic Syntax and Structure of Robots.txt

The syntax of a robots.txt file is straightforward. It consists of one or more groups of directives, each specifying rules for a specific crawler. Each group begins with a User-agent line that specifies the crawler the rules apply to. Following this are one or more Disallow or Allow lines that indicate which parts of the site the crawler can or cannot access.

Here’s an example of a basic robots.txt file:

User-agent: *

Disallow: /private/

Allow: /public/

In this example, the User-agent line with an asterisk (*) applies the rules to all crawlers. The Disallow directive prevents crawlers from accessing the /private/ directory, while the Allow directive permits access to the /public/ directory.
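If you'd like to see how a parser reads these rules, here is a minimal sketch using the robots.txt parser in Python's standard library. The crawler name "ExampleBot" and the two page URLs are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# The rules from the example above. Directives in a group are kept on
# consecutive lines: Python's parser treats a blank line as the end of a group.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /public/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# "ExampleBot" is a hypothetical crawler name; it falls under the * group.
private_ok = parser.can_fetch("ExampleBot", "https://www.example.com/private/report.html")
public_ok = parser.can_fetch("ExampleBot", "https://www.example.com/public/index.html")

print(private_ok)  # False: the path starts with /private/
print(public_ok)   # True: the path starts with /public/
```

Keep in mind that this only shows how one parser interprets the file. Individual search engines may handle edge cases differently.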

Another directive is Crawl-delay, which specifies the number of seconds a crawler should wait between requests to the server. This can be useful for managing server load, especially for large sites. Support varies by crawler, however: Googlebot, for instance, ignores Crawl-delay.

User-agent: *

Crawl-delay: 10
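A crawler that honours Crawl-delay reads the value for its own group and pauses between requests. The sketch below reads the value with Python's standard library parser; "ExampleBot" is a hypothetical crawler name.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 10
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# crawl_delay() returns the delay for the group that applies to this
# crawler, or None when the group sets no delay.
delay = parser.crawl_delay("ExampleBot")  # hypothetical crawler name
print(delay)  # 10
```

A polite crawler would then sleep for that many seconds between requests. Whether a given search engine does so depends on that engine.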

The Sitemap directive is also important. It tells crawlers where to find your XML sitemap, which lists the URLs you want to be indexed. This helps search engines understand your site structure better.

Sitemap: https://www.example.com/sitemap.xml
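To confirm that a Sitemap line can be picked up, you can read it back with Python's standard library parser. This is a small sketch that assumes Python 3.8 or later, where the site_maps() method is available.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Sitemap lines are not tied to any User-agent group.
# site_maps() returns a list of URLs, or None if the file declares none.
sitemaps = parser.site_maps()
print(sitemaps)  # ['https://www.example.com/sitemap.xml']
```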

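Remember that precedence is not decided by line order alone. Crawlers that follow the Robots Exclusion Protocol standard (RFC 9309), including Googlebot, obey only the group whose User-agent line matches them most specifically and, within that group, apply the rule with the longest matching path. Some older or simpler parsers instead apply the first rule that matches, so placing specific rules before general ones remains a safe habit.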
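For crawlers that follow RFC 9309, the rule with the longest matching path wins, and an Allow rule wins a tie. The sketch below illustrates that idea only: it ignores wildcards and User-agent group selection, and the function name and the /private/press/ path are made up for illustration.

```python
from typing import List, Tuple

Rule = Tuple[str, str]  # (directive, path), e.g. ("disallow", "/private/")


def is_allowed(rules: List[Rule], path: str) -> bool:
    """Longest-match precedence in the style of RFC 9309 (no wildcards)."""
    best_len = -1
    allowed = True  # a path that matches no rule may be crawled
    for directive, rule_path in rules:
        # Skip empty rules and rules whose path is not a prefix of the URL path.
        if not rule_path or not path.startswith(rule_path):
            continue
        is_allow = directive.lower() == "allow"
        longer = len(rule_path) > best_len
        tie_prefers_allow = len(rule_path) == best_len and is_allow
        if longer or tie_prefers_allow:
            best_len = len(rule_path)
            allowed = is_allow
    return allowed


# Hypothetical rules: block /private/ but open up one subfolder.
rules = [("disallow", "/private/"), ("allow", "/private/press/")]
reordered = list(reversed(rules))

print(is_allowed(rules, "/private/press/kit.html"))      # True
print(is_allowed(reordered, "/private/press/kit.html"))  # True, order is irrelevant
print(is_allowed(rules, "/private/notes.html"))          # False
```

Under this model, swapping the two lines changes nothing. Under a first-match parser it could, which is why keeping specific rules first is still a reasonable convention.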

Each directive should be on a new line, and comments can be added using the # symbol. This helps in maintaining clarity and understanding within the robots.txt file.

# Block all crawlers from the /temp/ directory

User-agent: *

Disallow: /temp/

Using these basic syntax rules, you can create a robots.txt file that effectively manages how crawlers interact with your site. Don’t forget that you need to test your file to verify it works as expected.

How Do You Create A Robots.txt File?

Creating a robots.txt file is a simple process. Follow these steps to make sure it's done correctly and effectively:

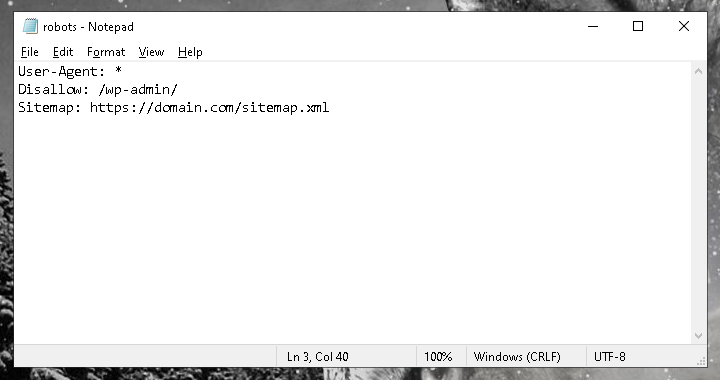

Step 1: Create the File

Use a plain text editor to create your robots.txt file. Avoid word processors, as they can add formatting that causes issues. Suitable editors include Notepad, TextEdit, or any other basic text editor. Save the file with the name "robots.txt" and make sure it's encoded in UTF-8.

Step 2: Add Rules to the File

Start by defining the user agents and specifying the directives for each. Here's a basic example:

User-agent: *

Disallow: /private/

Allow: /public/

Sitemap: https://www.example.com/sitemap.xml

This example blocks all crawlers from accessing the /private/ directory, allows them to access the /public/ directory, and provides the location of the sitemap.
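If you generate the file from a script rather than a text editor, Steps 1 and 2 might look like the sketch below. The helper name write_robots_txt is made up, and a temporary directory stands in for your real web root.

```python
import tempfile
from pathlib import Path

RULES = """\
User-agent: *
Disallow: /private/
Allow: /public/

Sitemap: https://www.example.com/sitemap.xml
"""


def write_robots_txt(directory: Path, rules: str) -> Path:
    """Write the rules to <directory>/robots.txt as UTF-8 plain text."""
    target = directory / "robots.txt"
    target.write_text(rules, encoding="utf-8")
    return target


# A temporary directory is used here so the example has no side effects;
# in practice you would pass the folder that maps to your site root.
with tempfile.TemporaryDirectory() as web_root:
    path = write_robots_txt(Path(web_root), RULES)
    file_name = path.name
    saved = path.read_text(encoding="utf-8")

print(file_name)  # robots.txt
```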

Step 3: Upload the File

Place the robots.txt file in the root directory of your website. This is important for it to be effective. For instance, for www.example.com, the file should be accessible at www.example.com/robots.txt. If you're using a subdomain, make sure that the file is at the root of that subdomain.
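Because each host has its own robots.txt, it can help to work out which file governs a given page. Here is a small sketch; the function name and the page URLs are made up for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL for the scheme and host of page_url."""
    parts = urlsplit(page_url)
    # Keep scheme and host, replace the path, drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


main_site = robots_txt_url("https://www.example.com/shop/item?id=42")
subdomain = robots_txt_url("https://blog.example.com/2024/post/")

print(main_site)  # https://www.example.com/robots.txt
print(subdomain)  # https://blog.example.com/robots.txt
```

The second result shows why a subdomain needs its own file: rules on www.example.com do not apply to blog.example.com.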

Step 4: Test the File

Before finalising, test your robots.txt file to make sure it works as intended. Open a private browsing window and navigate to your file's URL to check its accessibility.

After uploading and testing, Google's crawlers will automatically find and use your robots.txt file.
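Beyond checking that the file loads, you may want to confirm that the rules behave as you expect. One possible approach is to list a few URLs with the result you expect and compare them against a parser. In this sketch the helper name find_mismatches, the crawler name "ExampleBot" and the URLs are all made up.

```python
from urllib.robotparser import RobotFileParser


def find_mismatches(robots_txt, user_agent, expectations):
    """Return (url, expected, actual) for every URL whose result differs."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    mismatches = []
    for url, expected in expectations.items():
        actual = parser.can_fetch(user_agent, url)
        if actual != expected:
            mismatches.append((url, expected, actual))
    return mismatches


ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /public/
"""

# URL -> should a crawler be allowed to fetch it?
EXPECTATIONS = {
    "https://www.example.com/private/report.html": False,
    "https://www.example.com/public/index.html": True,
    "https://www.example.com/": True,
}

problems = find_mismatches(ROBOTS_TXT, "ExampleBot", EXPECTATIONS)
print(problems)  # [] when every URL behaves as expected
```

To check the live file instead of a string, RobotFileParser also offers set_url() and read(), which fetch the file over the network. Python's parser does not handle wildcards, so treat its verdict as a first check rather than a guarantee of how every search engine will behave.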

Common Directives Used in Robots.txt

Understanding the common directives in a robots.txt file is essential for effective SEO management. These directives tell search engine crawlers what they can and cannot access on your site.

User-agent

The User-agent directive specifies which crawlers the rules apply to. An asterisk (*) means the rules apply to all crawlers. Specific user agents can also be targeted, such as Googlebot.

Example:

User-agent: *

User-agent: Googlebot
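A crawler follows the group that names it and falls back to the * group only when no group does. The sketch below shows this with Python's standard library parser; the /drafts/ path and the crawler name "ExampleBot" are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /drafts/

User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

drafts_url = "https://www.example.com/drafts/post.html"
private_url = "https://www.example.com/private/page.html"

googlebot_drafts = parser.can_fetch("Googlebot", drafts_url)    # Googlebot group applies
other_drafts = parser.can_fetch("ExampleBot", drafts_url)       # falls back to the * group
googlebot_private = parser.can_fetch("Googlebot", private_url)  # * group is not consulted

print(googlebot_drafts, other_drafts, googlebot_private)  # False True True
```

Note the last result: once a crawler has its own group, rules in the * group no longer apply to it. If Googlebot should also stay out of /private/, that rule needs to be repeated in the Googlebot group.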

Disallow

The Disallow directive prevents crawlers from accessing specific pages or directories. It must start with a forward slash (/) to indicate the root.

Example:

Disallow: /private/

Disallow: /temp/file.html
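Disallow rules match by path prefix, which is easy to get subtly wrong. Here is a short sketch of what the two rules above do and do not cover; the extra paths and the crawler name "ExampleBot" are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /temp/file.html
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def can_crawl(path):
    # "ExampleBot" is a hypothetical crawler name.
    return parser.can_fetch("ExampleBot", "https://www.example.com" + path)


paths = [
    "/private/",                 # blocked: exact prefix
    "/private/reports/q1.html",  # blocked: everything under /private/
    "/private-events/",          # allowed: does not start with /private/
    "/temp/file.html",           # blocked: the single file
    "/temp/other.html",          # allowed: a different file in /temp/
]
results = {path: can_crawl(path) for path in paths}

for path, ok in results.items():
    print(path, "allowed" if ok else "blocked")
```

The trailing slash matters: a rule written as /private, without the slash, would also match /private-events/.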

Allow

The Allow directive permits access to specific pages or directories within a disallowed section. This is useful for allowing access to certain files while blocking others in the same directory.

Example:

Disallow: /private/

Allow: /private/public.html

Crawl-delay

The Crawl-delay directive specifies the number of seconds a crawler should wait between requests. This helps manage server load.

Example:

Crawl-delay: 10

Sitemap

The Sitemap directive indicates the location of your XML sitemap. This helps search engines find and index your site's content more efficiently.

Example:

Sitemap: https://www.example.com/sitemap.xml

These common directives form the foundation of a well-structured robots.txt file. By using them correctly, you can control crawler access, optimise crawl efficiency, and improve your site's SEO performance. For more detailed guidance, refer to Google's official documentation.

Final Thoughts

Effective use of robots.txt is a fundamental aspect of SEO. It helps make sure that search engines crawl and index your site as intended.

While it helps manage crawler traffic and protect server resources, it’s just one piece of the SEO puzzle.

As the leading SEO agency in Sydney, Marketix Digital offers comprehensive technical SEO audit services. Our audits use the C.R.I.C.S (Crawl, Render, Index, Cache, Serve) framework to uncover critical insights and strategies for superior rankings.

Would you like to see a sample of how we optimise a website's technical aspects to enhance SEO performance? Contact us today!
